
Kubernetes, the open-source container orchestration platform, is becoming a cornerstone technology for fast-growing companies looking to scale their AI and machine learning (ML) capabilities. With its flexibility, scalability, and powerful automation features, Kubernetes is well suited to handling the resource-intensive workloads of AI applications, enabling companies to deploy and manage AI models efficiently at scale.

Why Kubernetes for AI?

Kubernetes was initially developed to manage containerized applications, but it has rapidly evolved into a critical infrastructure layer for AI-driven companies. By orchestrating containers, Kubernetes allows developers to deploy, scale, and manage applications seamlessly across clusters of servers. This makes it an ideal choice for AI and ML workloads, which often require substantial computational resources, frequent updates, and rapid iteration.

With Kubernetes, companies can streamline the deployment of AI models across cloud, on-premises, and hybrid environments, making it possible to support large-scale AI applications without the need for dedicated infrastructure. This flexibility is invaluable for fast-growing businesses that need to deploy AI models quickly to stay competitive.

Key Benefits of Kubernetes in AI for Fast-Growing Companies

1. Scalability and Flexibility

One of the biggest advantages of Kubernetes for AI is its ability to scale resources dynamically. AI workloads are resource-intensive, often requiring bursts of compute power to train models and run complex data analyses. Kubernetes enables companies to scale up or down based on demand, ensuring that resources are available when needed and minimizing costs during periods of low demand.

For fast-growing companies with fluctuating workloads, Kubernetes provides the flexibility to deploy and manage AI models across multiple environments. With Kubernetes, developers can use a unified platform to deploy AI models on cloud services like Microsoft Azure, AWS, and Google Cloud, or even on-premises, maintaining consistency across different infrastructures.

Andrew Lee, CTO at a leading fintech startup, explains, “Kubernetes has allowed us to deploy AI models at a much faster pace. The scalability means we’re not constantly overprovisioning resources, which is vital for a startup that’s growing quickly. We can add more capacity as needed without interrupting our operations.” (Source: TechCrunch)

2. Cost Efficiency Through Resource Optimization

For companies scaling rapidly, controlling costs is critical. Kubernetes offers fine-grained resource management: teams can set CPU, memory, and GPU requests and limits for each container and cap total consumption per namespace with resource quotas, which prevents costly overuse. Because AI models run in containers on shared clusters, companies can avoid the high cost of maintaining dedicated AI infrastructure and instead make fuller use of shared resources.
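As a minimal sketch of these resource controls, the Python snippet below builds a Deployment manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f -` accepts on standard input. The function name, model name, image, and CPU/memory figures are hypothetical placeholders, and the `nvidia.com/gpu` resource assumes the NVIDIA device plugin is installed on the cluster.

```python
import json


def model_deployment(name: str, image: str, replicas: int, gpus: int = 0) -> dict:
    """Build a Deployment manifest for a model server with explicit resource settings.

    Hypothetical helper: names and resource figures are illustrative only.
    """
    resources = {
        # Requests: what the scheduler reserves on a node for the container.
        "requests": {"cpu": "2", "memory": "8Gi"},
        # Limits: the runtime ceiling (CPU is throttled, memory overuse is OOM-killed).
        "limits": {"cpu": "4", "memory": "16Gi"},
    }
    if gpus:
        # GPUs are an extended resource set under limits; this assumes a GPU device plugin.
        resources["limits"]["nvidia.com/gpu"] = str(gpus)
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "resources": resources}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = model_deployment(
        "fraud-model", "registry.example.com/fraud-model:1.0", replicas=2, gpus=1
    )
    # Pipe into the cluster with: python build_manifest.py | kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```

Requests drive scheduling decisions while limits cap consumption at runtime; setting both is what keeps one training job from starving its neighbors on a shared node.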

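Furthermore, Kubernetes supports autoscaling and load balancing, helping companies optimize their infrastructure. The Horizontal Pod Autoscaler adds or removes pod replicas based on observed metrics such as CPU utilization, a cluster autoscaler (where the platform provides one) adds or removes nodes, and Services spread traffic across the running pods. When demand spikes, resources are allocated automatically to handle the load, and when demand drops, they are released. This elasticity is particularly valuable for companies experiencing rapid growth, because it keeps cloud spending closer to actual usage.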
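The Horizontal Pod Autoscaler’s core rule, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below reproduces that rule in Python, including the default 10% tolerance band and min/max clamping. It is a simplified illustration: the function name and the default bounds are assumptions, and it ignores the stabilization windows, scaling policies, and pod-readiness handling of the real controller.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric).

    Hypothetical helper; min/max defaults are illustrative assumptions.
    """
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already within the tolerance band around the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the autoscaler's configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Average CPU utilization (%) across pods versus a 60% target.
    print(desired_replicas(4, 90, 60))  # demand spike
    print(desired_replicas(4, 20, 60))  # demand drop
```

For example, four replicas averaging 90% CPU against a 60% target scale to six, while the same four replicas at 20% scale down to two.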

I recommend watching StackRox’s Kubernetes autoscaling 101 video for an introduction.

3. Rapid Iteration and Deployment

Kubernetes enables fast-growing companies to adopt agile practices, with rapid iteration and deployment cycles that are essential for AI development. AI models frequently require updates based on new data or changing business requirements, and Kubernetes allows for these updates to be deployed quickly without disrupting existing services.
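Updates without disruption typically come from the Deployment rolling-update strategy, whose `maxSurge` and `maxUnavailable` settings both default to 25%. Per the Kubernetes documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down. The Python sketch below uses hypothetical helper names (it is not Kubernetes code) to turn those settings into the pod-count bounds a rollout stays within.

```python
import math


def rolling_update_budget(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Convert percentage maxSurge/maxUnavailable into pod counts (surge, unavailable)."""
    surge = math.ceil(replicas * max_surge_pct / 100)  # maxSurge rounds up
    unavailable = math.floor(replicas * max_unavailable_pct / 100)  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        # Assumption based on the upstream controller: if both round to zero,
        # allow one unavailable pod so the rollout can still make progress.
        unavailable = 1
    return surge, unavailable


def rollout_bounds(replicas: int, max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (minimum available pods, maximum total pods) during a rollout."""
    surge, unavailable = rolling_update_budget(replicas, max_surge_pct, max_unavailable_pct)
    return replicas - unavailable, replicas + surge


if __name__ == "__main__":
    for n in (1, 4, 10):
        low, high = rollout_bounds(n)
        print(f"{n} replicas: between {low} and {high} pods during the update")
```

With 10 replicas and the defaults, surge is ceil(2.5) = 3 and unavailable is floor(2.5) = 2, so the rollout keeps between 8 and 13 pods; with a single replica, the new pod is started before the old one is removed.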

Using Kubernetes, data scientists and developers can iterate on models, train and test them in isolated environments, and deploy updates without delays. The platform’s container-based approach makes it practical to run different model versions side by side for testing and gradual rollout, which reduces the risk of downtime and helps teams improve model accuracy over time.
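One common way to run two model versions side by side without extra tooling is the replica-ratio canary: two Deployments (here the hypothetical `model-v1` and `model-v2`) carry a shared `app` label, and a single Service selects on that label, so traffic is spread across the pods of both. The Python sketch below, with hypothetical helper names, computes the replica split for a target canary share. Traffic only approximates the replica ratio, because kube-proxy balances connections rather than individual requests; precise weighted splits typically need an ingress controller or service mesh.

```python
def split_replicas(total: int, canary_fraction: float) -> tuple[int, int]:
    """Return (stable, canary) replica counts approximating canary_fraction of traffic."""
    if total < 2:
        raise ValueError("need at least 2 replicas to run both versions")
    if not 0.0 < canary_fraction < 1.0:
        raise ValueError("canary_fraction must be strictly between 0 and 1")
    # Round to the nearest whole pod, but keep at least one pod of each version.
    canary = min(max(1, round(total * canary_fraction)), total - 1)
    return total - canary, canary


def shared_service(app: str, port: int = 80, target_port: int = 8080) -> dict:
    """Service that selects pods from both Deployments via their common `app` label."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {
            # Only `app` is selected; each Deployment adds its own `version` label
            # (e.g. v1 / v2) in its own selector so the two stay distinct.
            "selector": {"app": app},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    for total, share in [(10, 0.1), (4, 0.1), (20, 0.25)]:
        stable, canary = split_replicas(total, share)
        print(f"{total} pods, target {share:.0%}: "
              f"stable={stable}, canary={canary}, actual={canary / total:.0%}")
```

The 4-pod case shows the main limitation of this pattern: with few replicas, the achievable traffic shares are coarse (25% instead of the requested 10%).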

Jessica Rivera, Head of AI at a logistics tech firm, says, “Kubernetes lets us run experiments with different model versions and deploy updates seamlessly. We can A/B test models across regions without significant operational overhead, allowing us to innovate and adjust our approach as our needs evolve.” (Source: Forbes)

4. Simplified Management of Complex Workflows

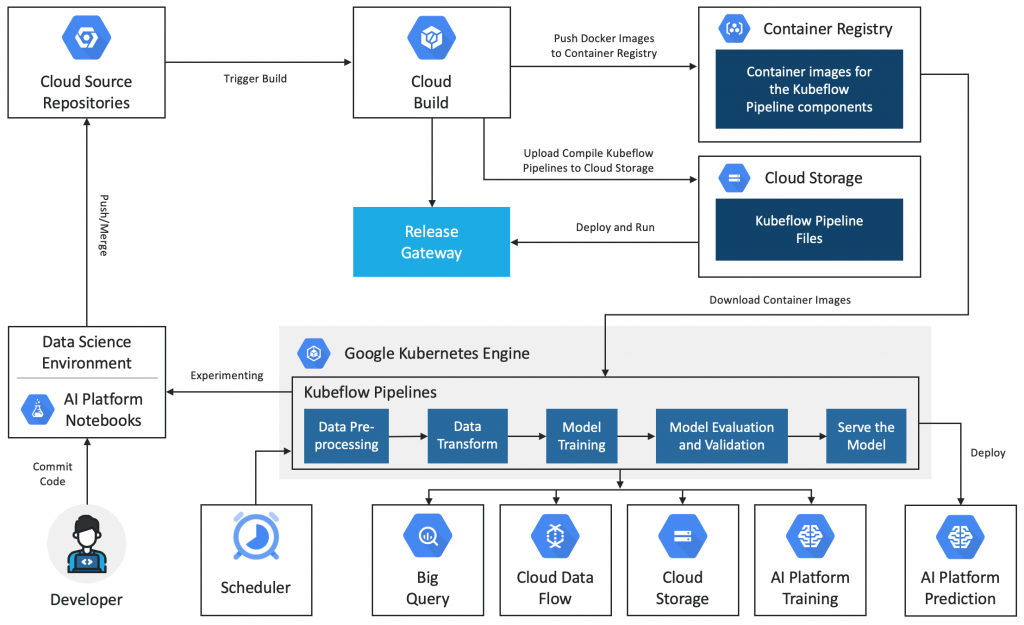

AI workflows often involve multiple stages, from data preprocessing to model training and inference. Kubernetes supports workflow orchestration, which allows companies to automate these complex pipelines. Tools like Kubeflow have been built on Kubernetes to specifically support ML workflows, providing a suite of tools for model training, deployment, and monitoring.

To learn more, watch IBM’s video explaining orchestration.

For example, Kubeflow Pipelines, an orchestration tool, enables data scientists to create repeatable, end-to-end workflows for ML. This automation saves time, reduces errors, and ensures that models can be consistently deployed and managed across environments. Fast-growing companies, particularly those with limited data science resources, can benefit from this streamlined workflow management, which reduces the manual work involved in scaling AI projects.
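Pipeline tools such as Kubeflow Pipelines model a workflow as a directed acyclic graph of steps and run each step once its inputs are ready. The plain-Python sketch below illustrates only that scheduling idea using the standard library’s `graphlib` (Python 3.9+); it does not use the Kubeflow Pipelines SDK, and the stage names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical ML pipeline: each stage maps to the set of stages it depends on.
PIPELINE: dict[str, set[str]] = {
    "ingest": set(),
    "validate": {"ingest"},
    "featurize": {"ingest"},
    "train": {"validate", "featurize"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}


def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group stages into waves whose members depend only on earlier waves."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every stage whose dependencies are all done
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished, unlocking its dependents
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(execution_waves(PIPELINE), start=1):
        print(f"wave {i}: {', '.join(wave)}")
```

Steps in the same wave, such as validation and feature engineering here, do not depend on each other and could run as separate pods in parallel. This sketch waits for a whole wave to finish before starting the next, whereas a real orchestrator starts each step as soon as its own dependencies complete.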

Kubernetes in Action: Success Stories

1. E-commerce Personalization

Fast-growing e-commerce platforms are using Kubernetes to deliver real-time personalization and recommendation engines. By orchestrating containerized ML models, these platforms can analyze customer behavior in real-time, generating personalized product recommendations and dynamic pricing adjustments. Kubernetes enables the rapid deployment of these AI-driven personalization engines, which are crucial for retaining and engaging users in a highly competitive market.

2. Financial Fraud Detection

In the financial sector, startups and fintech companies are adopting Kubernetes to manage fraud detection models that analyze transaction data in real time. With Kubernetes, they can deploy multiple versions of fraud detection models simultaneously, testing and improving them with live data. This approach ensures that fraud detection systems remain accurate and adaptive as fraud tactics evolve.

Samir Patel, VP of Data Science at a fintech firm, explains, “Kubernetes allows us to test and deploy fraud detection models rapidly, which is essential for protecting our customers in a fast-paced industry. We can adjust models on the fly, and the containerization means we’re not limited by our infrastructure.” (Source: Finextra)

3. Healthcare Data Analysis

Fast-growing healthcare companies are using Kubernetes to process and analyze massive datasets, supporting AI applications in diagnostics, treatment recommendations, and patient monitoring. Kubernetes allows these companies to scale their data processing capabilities while meeting strict regulatory requirements: hybrid and multi-cloud deployments let them keep sensitive patient data on-premises or in approved regions while running other workloads in the public cloud.

Overcoming Challenges with Kubernetes in AI

While Kubernetes offers significant benefits, implementing it for AI does come with challenges. Setting up Kubernetes for AI requires expertise in container orchestration, and for companies new to this technology, the learning curve can be steep. Additionally, AI workloads can be unpredictable and often demand specialized infrastructure configurations, such as GPU-equipped nodes, that may not align with traditional Kubernetes setups, as shown in this diagram.

To address these challenges, many companies leverage managed Kubernetes services like Azure Kubernetes Service (AKS), Amazon Elastic Kubernetes Service (EKS), and Google Kubernetes Engine (GKE), which simplify deployment and reduce operational complexity. Managed services also offer built-in scalability, security features, and support, allowing fast-growing companies to focus on scaling their AI initiatives rather than managing infrastructure.

Looking Ahead: Kubernetes as a Foundation for Scalable AI

As more fast-growing companies adopt AI, Kubernetes is becoming essential for deploying, managing, and scaling complex models in a cost-effective and efficient way. With its powerful orchestration capabilities, Kubernetes offers a pathway for these companies to manage large-scale AI workloads and respond quickly to evolving business needs.

Kubernetes’ compatibility with multi-cloud and hybrid setups also makes it a future-proof foundation for scaling AI. Companies that adopt Kubernetes today are better positioned to handle the growing demands of AI, making it a critical part of any fast-growing business’s AI strategy.

For more information on Kubernetes and its applications in AI, visit the Kubernetes documentation or explore tools like Kubeflow for ML workflows.

Franck Kengne

Tech Visionary and Industry Storyteller
